End-to-End Testing Best Practices: Complete 2025 Guide

End-to-end testing ensures your app works seamlessly by validating complete user workflows - from the UI to APIs, databases, and third-party services. It helps catch issues that other testing methods might miss, especially in complex systems.

Key Highlights:

- Purpose: Validates full user journeys (e.g., signing up, making purchases) to ensure reliability and functionality.

- Importance: Prevents failures in critical workflows like checkout or login, saving time, money, and reputation.

- Challenges: Testing across platforms (web, mobile), handling delays, and using realistic data.

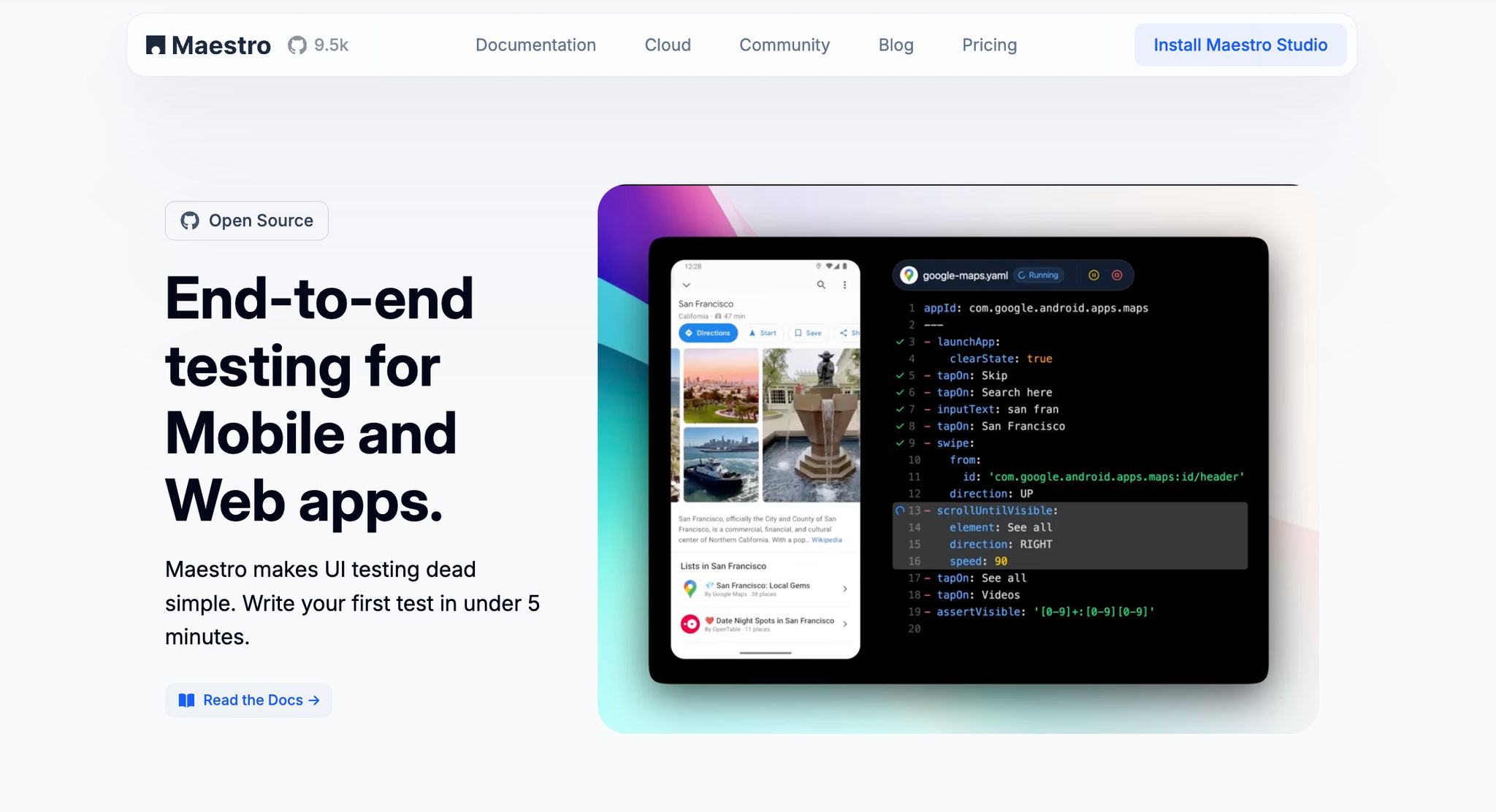

- Solution: Tools like Maestro simplify automation with YAML syntax, cross-platform support, and visual testing interfaces.

Quick Tips for Success:

- Focus on high-impact user flows, like payment processing or login.

- Use dynamic waits and stable selectors to avoid flaky tests.

- Regularly update and maintain test cases as features evolve.

- Integrate tests into CI/CD pipelines for continuous validation.

End-to-end testing isn’t just about finding bugs; it’s about ensuring a smooth experience for your users. Tools like Maestro make this process easier and more efficient.

Planning Effective End-to-End Tests

Turning end-to-end testing into a productive process requires careful planning that focuses on key user flows instead of wasting time on scenarios with minimal impact.

To do this effectively, you need to understand your users, prioritize based on potential business risks, and work with data that closely mirrors real-world conditions. This ensures your testing efforts hit the mark, covering the most essential workflows without unnecessary overreach. Start by identifying the user journeys that are central to your business.

Mapping User Journeys

Mapping user journeys is the cornerstone of effective end-to-end testing. It helps you pinpoint the paths users typically follow when interacting with your application. To begin, analyze user analytics, dig into support tickets, and review business priorities to identify the flows that matter most.

The focus should be on high-impact scenarios - like user registration, logging in, payment processing, or data submissions - because these are often tied to revenue or user retention. For example, in an e-commerce app, it’s crucial to map the entire journey from product search to checkout, including scenarios such as applying discount codes or handling payment errors.

Don’t overlook edge cases. Unexpected situations - like invalid data entry, losing connectivity, or users abandoning processes - can reveal critical issues that might otherwise go unnoticed.

Also, consider the different ways user personas interact with your app. A first-time visitor will likely take a different path than a returning customer. Mapping these variations ensures your tests cover a wide range of user behaviors, not just the most obvious ones.

If you’re using Maestro, its declarative YAML syntax simplifies the process of turning these mapped journeys into actionable tests. Each step of the user flow can be defined with clear commands that reflect real actions, making the tests easy to understand and maintain. This groundwork is essential for prioritizing risks and setting up data-driven tests.

Risk-Based Test Prioritization

Not every feature in your application carries the same level of risk, and your testing strategy should reflect that. Focus your efforts on the most critical and frequently used workflows - like login, checkout, or key system integrations - since these are essential for both user satisfaction and business continuity.

Prioritize features whose failure would cause the greatest disruption. For example, a broken login system impacts every user, while a minor issue in a rarely accessed settings page is less urgent.

To make prioritization easier, create a risk matrix that evaluates each feature based on two factors: its business impact and how often it’s used. Features that rank high in both areas - like payment processing in a financial app - should be at the top of your testing list.
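
A risk matrix like this can be as simple as a sorted list. The sketch below is one possible way to score features, assuming a 1 to 5 scale for both factors and a plain product as the score; the feature names, scale, and scoring rule are illustrative assumptions rather than a prescribed method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    name: str
    business_impact: int  # assumed scale: 1 (low) to 5 (critical)
    usage_frequency: int  # assumed scale: 1 (rare) to 5 (constant)

    @property
    def risk_score(self) -> int:
        # Simple product of the two factors; other weightings are possible.
        return self.business_impact * self.usage_frequency


def prioritize(features):
    """Return features ordered from highest to lowest risk score."""
    return sorted(features, key=lambda f: f.risk_score, reverse=True)


# Hypothetical feature list for illustration only.
features = [
    Feature("settings page", business_impact=1, usage_frequency=1),
    Feature("payment processing", business_impact=5, usage_frequency=4),
    Feature("login", business_impact=5, usage_frequency=5),
    Feature("profile photo upload", business_impact=2, usage_frequency=2),
]

ranked = prioritize(features)
for feature in ranked:
    print(f"{feature.risk_score:>2}  {feature.name}")
```

Features at the top of the output are the ones to cover first and to run on every deployment.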

It’s also smart to think about worst-case scenarios. What happens during peak usage times? What if an external service goes down? What about unexpected user actions? Testing for these situations can help you uncover potential weak points that could lead to larger system failures.

Given the reality of time constraints, use your risk assessment to decide which tests are essential to run with every deployment (like critical path tests) and which can be scheduled less frequently.

Once you’ve identified the most important features to test, it’s time to ensure your testing data is as realistic as possible.

Using Realistic Test Data

The accuracy of your end-to-end tests depends heavily on the quality of your test data. Using data that closely mimics real-world conditions is essential for validating how your application will perform in production.

Without realistic data - like varying text lengths, special characters, or real-world data relationships - your tests might pass, but the application could still fail in live environments. High-quality data ensures reliable test results and reduces the chance of false positives caused by inconsistencies in data or testing conditions.
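
As a rough illustration, a small generator can mix hand-picked edge cases with seeded random values so that every run sees the same awkward inputs. The field names, the edge-case list, and the `example.test` domain below are assumptions made for this sketch.

```python
import random
import string

# Hypothetical edge cases: empty, very short, apostrophe, accents,
# non-Latin script, and a very long value.
EDGE_CASE_NAMES = ["", "A", "O'Brien", "Zoë Müller", "李小龙", "x" * 255]


def synthetic_users(count, seed=42):
    """Build deterministic fake users; edge cases come first."""
    rng = random.Random(seed)  # seeded so every run produces the same data
    alphabet = string.ascii_letters + " -'"
    users = []
    for i in range(count):
        if i < len(EDGE_CASE_NAMES):
            name = EDGE_CASE_NAMES[i]
        else:
            length = rng.randint(1, 40)  # vary the text length
            name = "".join(rng.choice(alphabet) for _ in range(length))
        users.append({"id": i + 1, "name": name, "email": f"user{i + 1}@example.test"})
    return users


users = synthetic_users(10)
print(len(users), "users generated")
```

Seeding the generator keeps failures reproducible: a test that breaks on one generated name will break on the same name in the next run.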

To achieve this, establish strong data management practices. Your test environment should always start in a predictable state before each run. While using actual production data might seem like a quick solution, it comes with privacy and security risks. Instead, consider leveraging Test Data Management (TDM) techniques. This could involve anonymizing production data to remove sensitive information or generating synthetic data that reflects real-world characteristics.
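
One common way to sanitize production records is to replace sensitive fields with salted hashes. The sketch below assumes a hypothetical set of sensitive field names and uses only the standard library. Note that salted hashing is pseudonymization rather than full anonymization, so whether it satisfies your privacy requirements is something to verify separately.

```python
import hashlib

# Assumed field names; adjust to match your own schema.
SENSITIVE_FIELDS = {"email", "name", "phone"}


def pseudonymize(value: str, salt: str) -> str:
    """Map a value to a stable 12-character token using a salted SHA-256 hash."""
    digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()
    return digest[:12]


def anonymize_record(record: dict, salt: str) -> dict:
    """Return a copy of the record with sensitive fields replaced by tokens."""
    cleaned = {}
    for key, value in record.items():
        if key in SENSITIVE_FIELDS and value is not None:
            token = pseudonymize(str(value), salt)
            # Keep emails shaped like emails so format validation still passes.
            cleaned[key] = f"{token}@example.test" if key == "email" else token
        else:
            cleaned[key] = value
    return cleaned


original = {"id": 7, "email": "jane@corp.com", "name": "Jane Doe", "plan": "pro"}
print(anonymize_record(original, salt="test-env-salt"))
```

Because the same input always maps to the same token, relationships between records (for example, two orders placed by the same customer) survive the sanitizing step.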

AI-based generators can help here too. They can produce synthetic data that preserves the statistical properties of production data and covers edge cases without containing real personal information, which makes it easier to meet privacy requirements.

Finally, ensure your test scenarios are consistent by building dedicated configurations - like login credentials, environment setups, and cookies. This way, your tests can reliably simulate real-world conditions every time.

Setting Up Reliable Test Environments

Test Data Management Strategies

Managing test data effectively is key to ensuring smooth and reliable end-to-end testing. A practical approach involves replicating real-world conditions by using past production data. However, before doing so, it’s crucial to sanitize this data to remove any sensitive or confidential information. These practices help create a dependable and consistent testing environment that works seamlessly across different platforms.

Implementing Automation Best Practices

Developing effective automated tests involves creating solutions that can handle unpredictability while staying straightforward enough for teams to understand and modify.

Building Stable Automated Tests

Stability in automated testing starts with recognizing the unpredictable nature of mobile and web applications. Factors like network delays, varying device speeds, and dynamic content can disrupt traditional automation tools. To address this, your framework should be designed to tolerate these variations.

Dynamic waiting strategies are critical for stable tests. Instead of relying on fixed sleep commands - which can either waste time or lead to failures - smart automation waits for specific conditions. For example, it pauses until elements appear, animations finish, or network requests complete before moving on to the next step.
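
For frameworks that do not wait automatically, the idea can be sketched as a small polling helper. This is a generic illustration, not any particular tool's API; the `wait_until` name, its defaults, and the simulated `element_visible` check are assumptions.

```python
import time


def wait_until(condition, timeout=5.0, interval=0.1):
    """Poll `condition` until it returns a truthy value or `timeout` elapses."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result  # stop as soon as the condition holds
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)


# Simulated element that becomes visible on the third check.
attempts = {"count": 0}


def element_visible():
    attempts["count"] += 1
    return attempts["count"] >= 3


wait_until(element_visible, timeout=2.0, interval=0.01)
print("element appeared after", attempts["count"], "checks")
```

Unlike a fixed sleep, the helper returns as soon as the condition is met and fails with a clear error when it never is.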

Maestro simplifies this process with built-in tolerance for delays, eliminating the need for manual retry logic. It automatically handles common issues, such as UI elements not appearing immediately or screen taps not registering on the first attempt.

Element identification strategies are another cornerstone of stability. Instead of using fragile selectors that break when minor UI changes occur, strong tests rely on multiple identification methods. This could include combining text content, accessibility labels, and relative positioning to ensure elements are reliably targeted.
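
To make the fallback idea concrete, here is a minimal sketch that models a screen as a list of dictionaries and tries identifiers from most to least stable. The attribute names (`test_id`, `label`, `text`) and the `find_element` helper are hypothetical and do not correspond to a specific framework.

```python
def find_element(elements, *, test_id=None, label=None, text=None):
    """Try each identification strategy in order; accept only unambiguous matches."""
    strategies = [("test_id", test_id), ("label", label), ("text", text)]
    for attribute, wanted in strategies:
        if wanted is None:
            continue
        matches = [e for e in elements if e.get(attribute) == wanted]
        if len(matches) == 1:
            return matches[0]
        # Zero or several matches: fall through to the next strategy.
    raise LookupError("no strategy matched exactly one element")


# Hypothetical screen: the submit button's test_id was renamed in a refactor.
screen = [
    {"test_id": "btn-submit-v2", "label": "Submit order", "text": "Submit"},
    {"test_id": "btn-cancel", "label": "Cancel order", "text": "Cancel"},
    {"test_id": "btn-feedback", "label": "Submit feedback", "text": "Submit"},
]

button = find_element(screen, test_id="btn-submit", label="Submit order", text="Submit")
print(button["test_id"])
```

Here the stale `test_id` finds nothing, the accessibility label identifies the button, and the ambiguous text "Submit" is never relied on.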

Error recovery mechanisms are also essential. These allow tests to adapt when unexpected events occur, such as popup dialogs, network timeouts, or permission requests. By anticipating these scenarios and building responses into your test flows, you can keep tests running smoothly.

These practices form the backbone of a reliable and efficient automation workflow.

Benefits of Declarative Syntax

Once test stability is in place, adopting a declarative approach can simplify test creation significantly. Traditional automation frameworks often require deep programming knowledge and complex setups. Declarative syntax, on the other hand, focuses on what needs to be tested rather than how to implement the testing logic.

Maestro's YAML-based approach is a great example of this. It allows teams to write tests in natural, easy-to-read language, making automation accessible even to those without programming expertise. For instance, a test that opens an app, navigates to a specific screen, and verifies its content can be written in just a few lines of YAML.

This approach offers several advantages:

- Faster test creation: Teams can focus on crafting test scenarios instead of wrestling with complex APIs.

- Lower maintenance effort: Updating tests becomes as simple as tweaking clear, straightforward instructions, even when features change.

- Reduced learning curve: New team members can start contributing to test automation within days rather than spending weeks learning traditional tools.

Overall, declarative syntax makes test automation more approachable and efficient for everyone involved.

Using Maestro Studio for Visual Testing

Visual testing tools make automation easier, especially for non-technical users. Maestro Studio provides a user-friendly desktop interface that enables anyone to create and manage tests without needing to write code or use command-line tools.

The process is intuitive: users interact with their applications naturally, and the tool records these interactions as test steps. This point-and-click test creation allows QA professionals to build detailed test suites simply by using the app like an end user.

Maestro Studio includes MaestroGPT integration to help generate commands and answer questions during test authoring. This reduces the manual effort required to create reliable tests and enhances their durability over time.

Workspace management capabilities keep everything organized. Teams can collaborate on test creation and maintenance through shared workspaces, ensuring consistency across projects and team members.

The inline command generation feature adds transparency, showing how visual interactions translate into automation commands. This helps users understand the underlying logic while keeping the process simple.

Finally, real-time test execution lets users run tests as they build them. This immediate feedback makes it easier to catch and fix issues before tests are added to larger suites or integrated into CI/CD pipelines.

Note: While Maestro Cloud supports parallel execution across multiple devices, the local CLI runs tests sequentially by default. Parallel execution requires either cloud infrastructure or the --shards flag with multiple connected devices.

Leveraging Maestro's Built-In Resilience Features

Unlike traditional frameworks that require manual sleep() or wait() commands, Maestro includes built-in flakiness tolerance and automatic waiting to handle dynamic UIs without manual intervention. This means your tests remain stable even when UI elements don't appear immediately or screen taps don't register on the first attempt. Design your test flows to trust Maestro's intelligent waiting - avoid adding unnecessary delays that slow down execution.

Maintaining End-to-End Tests

Keeping end-to-end tests up to date is a constant effort, especially as features and interfaces evolve. The following practices align well with earlier discussions on planning and automation.

Continuous Testing in CI/CD Workflows

Incorporating end-to-end tests into your continuous integration and deployment (CI/CD) pipelines creates automated checkpoints, ensuring every code change is validated against real-world user scenarios before it reaches production.

Here’s a practical approach:

- Run smoke tests on every pull request to catch critical issues early.

- Execute comprehensive test suites nightly for deeper validation.

- Perform final checks just before deployment to ensure everything runs smoothly.
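
One way to implement these layers is to tag each test and let the pipeline trigger decide which tags run. The sketch below assumes hypothetical trigger names, tags, and flow names; a real pipeline would read them from its own configuration.

```python
# Assumed mapping from pipeline trigger to the tags that should run.
SUITES = {
    "pull_request": {"smoke"},
    "nightly": {"smoke", "regression"},
    "pre_deploy": {"smoke", "critical"},
}

# Hypothetical test flows with their tags.
TESTS = [
    ("login_flow", {"smoke", "critical"}),
    ("checkout_flow", {"critical", "regression"}),
    ("discount_code_flow", {"regression"}),
    ("profile_settings_flow", {"regression"}),
]


def select_tests(trigger):
    """Return the names of tests sharing at least one tag with the trigger's suite."""
    wanted = SUITES[trigger]
    return [name for name, tags in TESTS if tags & wanted]


for trigger in SUITES:
    print(trigger, "->", select_tests(trigger))
```

Pull requests run only the fast smoke subset, while the nightly run picks up every tagged flow.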

This layered system balances speed against thoroughness, but it only works if tests run quickly and reliably and report results clearly. Slow or unstable tests bottleneck deployments and undermine team confidence. Tools like Maestro help here by tolerating delays automatically, which reduces test instability.

Equally important is transparent test reporting. Integrating notifications into team communication tools ensures everyone is promptly alerted when tests fail, allowing issues to be addressed quickly.

Regular Test Case Maintenance

As your application evolves, so must your tests. Changes to functionality, features, or user interfaces can render existing tests outdated or unreliable, making regular upkeep essential.

Routine reviews help ensure your tests stay relevant. This includes updating coverage, revising scenarios, and removing redundant cases. Using version control for test assets simplifies tracking changes and makes it easy to revert if needed.

Declarative YAML files make these updates easier. They’re straightforward to edit, so non-technical team members can often contribute to maintenance tasks. This speeds up updates and keeps your tests aligned with the application.

Automated test validation also plays a role. Running tests regularly in staging environments can identify patterns, such as frequently failing scenarios, signaling areas that need attention. Keeping an eye on these patterns ensures your tests remain robust and effective.

Avoiding Common End-to-End Testing Pitfalls

End-to-end testing often encounters challenges that can throw automation efforts off track. Recognizing these issues and addressing them effectively ensures your testing process remains reliable and efficient.

Handling Test Flakiness

Test flakiness is one of the most frustrating hurdles in end-to-end testing. When tests pass one day and fail the next without any changes to the code, it creates confusion and undermines trust in automation.

The primary culprits behind flakiness are timing issues and environmental inconsistencies. Factors like network delays, slow-loading elements, and differences in device performance introduce unpredictable conditions that traditional testing frameworks struggle to manage.

To combat this, replace fixed sleep commands with dynamic waits. Dynamic waits adjust to real-time conditions, pausing only until elements appear, data loads, or animations finish. This approach minimizes unnecessary delays while improving test reliability.

Maestro tackles flakiness directly with automated wait strategies. Its ability to handle common delays, such as inconsistent UI interactions, ensures tests remain resilient even when minor issues occur. This reduces false failures and keeps your automation efforts on track.

Test design plays a big role in avoiding flakiness too. Steer clear of brittle selectors like pixel coordinates or fragile element hierarchies that can break when layouts change. Instead, use stable identifiers that persist across updates to maintain test stability.

Another key factor is environment isolation. When tests share databases or services, they can interfere with each other, leading to unpredictable outcomes. Running tests in clean, isolated environments prevents cross-contamination and ensures consistent results.
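
As a small illustration of isolation, each test below gets its own in-memory SQLite database seeded to a known state, so changes made by one test never leak into the next. The schema and seed row are assumptions for the sketch; a real suite might use containers or per-run schemas instead.

```python
import sqlite3
from contextlib import contextmanager


@contextmanager
def isolated_database():
    """Yield a fresh in-memory database in a known starting state."""
    conn = sqlite3.connect(":memory:")  # each connection is a separate database
    try:
        conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT UNIQUE)")
        conn.execute("INSERT INTO users (email) VALUES ('seed@example.test')")
        conn.commit()
        yield conn
    finally:
        conn.close()  # the data disappears with the connection


def count_users(conn):
    return conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]


# First "test" adds a user.
with isolated_database() as db:
    db.execute("INSERT INTO users (email) VALUES ('new@example.test')")
    first_run = count_users(db)

# Second "test" starts again from the seed state.
with isolated_database() as db:
    second_run = count_users(db)

print(first_run, second_run)
```

The second block sees only the seed row, which is the property that keeps results independent of test order.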

By addressing flakiness, you not only enhance test reliability but also lay the groundwork for better overall coverage.

Maintaining Complete Test Coverage

Gaps in test coverage leave critical user flows open to undetected bugs. Achieving thorough coverage isn’t just about hitting high percentages - it’s about focusing on the right scenarios.

Don’t limit tests to happy paths. Include edge cases, such as error handling and network interruptions, to confirm your application behaves predictably when things go wrong.

Prioritize tests based on risk. High-value user journeys, like payment processing or sensitive data operations, deserve extra attention. This ensures that the most critical parts of your application are well-protected.

Cross-platform testing adds complexity. Features that work seamlessly on iOS may behave differently on Android, and desktop functionality might not translate perfectly to mobile. Testing core workflows across all supported platforms ensures consistent user experiences.

Regular coverage audits help identify gaps that creep in over time. As new features are added and old ones evolve, test coverage can become uneven. Audits ensure you catch blind spots before they become major issues.
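
A basic audit can be automated by comparing the journeys you consider critical against the tests that actually exist on each platform. The journey names, platforms, and coverage set in this sketch are hypothetical.

```python
from itertools import product

# Assumed inputs: what should be covered, and where.
CRITICAL_JOURNEYS = ["login", "checkout", "signup"]
PLATFORMS = ["android", "ios", "web"]

# Hypothetical inventory of (journey, platform) pairs that have a test today.
existing_tests = {
    ("login", "android"), ("login", "ios"), ("login", "web"),
    ("checkout", "android"), ("checkout", "web"),
    ("signup", "web"),
}


def find_gaps(journeys, platforms, covered):
    """List every journey/platform pair that has no test."""
    return [pair for pair in product(journeys, platforms) if pair not in covered]


gaps = find_gaps(CRITICAL_JOURNEYS, PLATFORMS, existing_tests)
for journey, platform in gaps:
    print(f"missing: {journey} on {platform}")
```

Running a check like this on a schedule turns the audit into a report instead of a manual review.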

User analytics can also guide test planning. By analyzing real usage patterns, you can prioritize scenarios that reflect actual user behavior, rather than relying on assumptions about how the application should be used.

Preventing Outdated Test Suites

Outdated test suites can become a liability instead of a safeguard. They may produce false failures, miss genuine issues, and eventually get ignored or disabled, defeating their purpose.

This problem is especially common in fast-paced development environments. As features evolve and user interfaces change, test suites can quickly fall out of sync with the current application.

To keep test suites relevant, schedule regular reviews and updates. Automated validation helps identify outdated tests by flagging patterns of consistent failures. Tests that repeatedly fail for the same reasons often need updates rather than bug fixes.
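
The distinction between consistently failing and intermittently failing tests can be drawn from run history. The sketch below assumes a simple list of pass/fail booleans per test and a minimum sample size; the labels, threshold, and flow names are illustrative choices.

```python
def classify(history, min_runs=5):
    """Classify a test from its recent results (True = passed, oldest first)."""
    if len(history) < min_runs:
        return "insufficient data"
    failures = history.count(False)
    if failures == 0:
        return "healthy"
    if failures == len(history):
        # Fails every time: probably out of date, or a genuine regression.
        return "consistently failing"
    # Mixed results without a clear cause: a candidate flaky test.
    return "intermittent"


# Hypothetical run history for three flows.
runs = {
    "login_flow": [True, True, True, True, True],
    "legacy_banner_flow": [False, False, False, False, False],
    "checkout_flow": [True, False, True, True, False],
}

report = {name: classify(history) for name, history in runs.items()}
for name, status in report.items():
    print(f"{name}: {status}")
```

Tests flagged as consistently failing are the first candidates for a review, while intermittent ones point toward timing or environment problems.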

Collaboration between developers and testing teams is essential. When developers share upcoming interface changes or feature updates, testers can proactively adjust their scripts to avoid breakage. Similarly, testers can alert developers to potential impacts on existing tests.

Retiring redundant tests is another important step. As features are replaced or improved, some tests become unnecessary. Removing these outdated tests reduces clutter and ensures the suite focuses on current functionality.

Building a Scalable Testing Strategy

Creating a testing strategy that grows with your application and team is essential for maintaining quality and efficiency. The practices outlined in this guide work together to build a reliable framework that delivers consistent results, even as your needs evolve.

Key Takeaways

To achieve scalable and reliable testing, focus on aligning your planning, automation techniques, and continuous testing practices. Prioritize critical workflows and high-risk features that directly affect the user experience and business goals.

Scalable testing relies on stable automation. Incorporate dynamic waits, reliable selectors, and isolated environments to minimize flakiness and ensure dependable outcomes. Embedding continuous testing into your CI/CD pipeline speeds up feedback loops and boosts confidence in deployments.

As your application evolves, updating your tests regularly is vital. Using declarative syntax simplifies the process of creating and maintaining tests, making them accessible to everyone on the team. This accessibility is a key factor in scaling testing efforts effectively across larger teams.

Maestro is one tool built around these principles.

Why Choose Maestro

Maestro is designed to help you scale your testing strategy with ease, addressing the challenges of modern testing requirements. It tackles common issues like test flakiness by including built-in tolerance for delays and UI inconsistencies, eliminating the need for manual sleep commands.

Its declarative YAML syntax ensures that test creation is straightforward and accessible, even for team members without a technical background. This approach makes tests easy to read and maintain, fostering collaboration and enabling scalable testing across diverse teams.

With cross-platform support, Maestro allows you to test Android, iOS, and web applications using consistent syntax and functionality. This unified approach simplifies both the learning curve and ongoing maintenance, ensuring stable testing standards across all platforms.

For those who prefer visual tools over command-line interfaces, Maestro Studio offers a desktop application that enables users to create, edit, and run tests without writing code. This feature democratizes test creation, making it possible for anyone on your team to contribute.

When local testing reaches its limits, Maestro Cloud provides enterprise-grade capabilities. With parallel test runs across multiple devices, faster feedback cycles, and detailed reporting integrated into CI workflows, your testing strategy can scale effortlessly as your application and team grow - all without requiring significant architectural changes.

FAQs

How does end-to-end testing enhance the user experience of an application?

End-to-end testing plays a key role in refining the user experience by mimicking real-world scenarios and interactions. This process helps uncover potential issues before they ever reach your users. It ensures that every part of your application - whether it's the front-end, back-end, or the layers connecting them - works together smoothly.

By identifying bugs, performance bottlenecks, or integration hiccups early on, end-to-end testing contributes to a more reliable and user-friendly application. This not only boosts confidence in your software's ability to handle real-life situations but also results in satisfied users and fewer interruptions.

How can I manage test data effectively to ensure accurate and reliable end-to-end testing?

To make your end-to-end tests both dependable and practical, it's crucial to use test data that closely resembles real production data. This approach helps replicate actual scenarios, making it easier to identify issues that might otherwise slip through the cracks.

When creating test data, aim to cover a broad spectrum of use cases, including rare and edge cases. It’s also a good idea to automate the process of generating and cleaning up test data. Automation not only saves time but also ensures consistency, keeping your tests efficient and reliable in the long run.

How does Maestro make it easier for both technical and non-technical team members to create and manage end-to-end tests?

Maestro makes creating and managing end-to-end tests easier by providing a simple interface and tools that work for everyone - whether you're a developer or not. With features like visual workflows and minimal need for coding, it allows teams to concentrate on testing instead of wrestling with complicated setups.

For developers and technical users, Maestro offers strong automation features and smooth integration with popular frameworks. At the same time, its accessible design and easy-to-use functionality let non-technical team members actively contribute to the testing process. This collaborative approach speeds up feedback, increases test coverage, and boosts the overall quality of your software.
