How to Test User Flows in Mobile Applications

Testing user flows in mobile apps ensures users can navigate and complete tasks smoothly. Poor navigation leads to app abandonment - 66% of users uninstall apps after encountering issues. Here's how you can refine user flows:

- Understand user flows: Visual maps of how users navigate your app.

- Why test them? Identify usability problems like navigation bottlenecks and confusing gestures.

- Key steps: Map user flows, set goals, test across devices, and balance manual and automated testing.

- Tools to use: Maestro (YAML-based automation), analytics platforms, and cloud-based device testing.

Quick tip: Combining manual testing for usability with automation for repetitive tasks offers the best results. Start early to save costs and improve user satisfaction.


How to Prepare for User Flow Testing

Proper preparation is key to ensuring user flow testing identifies usability issues effectively. The work you do before testing begins often decides whether problems get caught or slip through unnoticed.

How to Map User Flows

Visualizing user flows lets you pinpoint actions, decisions, and exit points, ensuring that your testing covers every critical aspect.

"User flows help you visualize how users navigate within your app or website, helping you optimize screen layouts for intuitive, goal-oriented interaction." – Justinmind

Start with clear objectives and scope. Before sketching out any diagrams, define your goals for each user flow. Whether it’s mapping the onboarding process, checkout experience, or feature discovery journey, having a specific focus ensures your efforts remain actionable and relevant.

Stick to one objective per flow and use a consistent legend. Each user flow should address a single goal to avoid confusion. For example, keep flows for user registration, password recovery, and profile updates separate. Use a clear legend to represent elements - rectangles for screens, diamonds for decisions, and arrows for user movement.
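In Maestro, the one-objective rule maps naturally onto one flow file per goal. Below is a minimal sketch of a password recovery flow, assuming a hypothetical app with the id `com.example.shop`; every on-screen label is a placeholder for your own. The single `assertVisible` at the end plays the role of the flow's endpoint on the diagram: the goal is either reached or the flow fails there.

```yaml
# password_recovery.yaml: one flow, one goal
# (hypothetical app id and labels)
appId: com.example.shop
---
- launchApp:
    clearState: true        # start from a fresh app state
- tapOn: "Sign in"
- tapOn: "Forgot password?"
- tapOn: "Email"
- inputText: "test.user@example.com"
- tapOn: "Send reset link"
# The flow's single endpoint
- assertVisible: "Check your email"
```

Registration and profile updates would each live in their own file, just as they live in separate diagrams.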

Real-world examples show what this looks like in practice. Consider a delivery app that used wireframes connected by orange lines to map the journey from language selection to address confirmation. Similarly, an e-commerce flow charted multiple entry points, such as product banners and marketing campaigns, that all lead to the same order confirmation endpoint.

Keep flows straightforward. Ensure user paths move in one direction, avoiding circular or backward loops. This makes it easier to identify where users might get stuck or confused.

Validate with real users. Once your flow diagrams are complete, test them with actual users. Observing their behavior can reveal gaps or missteps between your intended design and how users truly navigate. This feedback allows you to refine your flows and improve the overall experience.

With your flows mapped out, the next step is to set specific goals to guide your testing.

Setting Test Goals and Scope

Well-defined, measurable goals help you focus on the most critical user paths.

Engage stakeholders early. Collaborate with product managers, designers, developers, and business leaders to define success. Their input helps you identify which user flows are most impactful for achieving business objectives.

Prioritize the most important flows. Not all user paths hold equal value. Focus on those that drive revenue or retention. For instance, an e-commerce app should prioritize testing the checkout process over secondary features like wish lists.
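If your flows are automated with Maestro, tags are one way to make these priorities explicit. A sketch, assuming a hypothetical `checkout.yaml` stored in a `.maestro/` folder, with placeholder app id and labels:

```yaml
# .maestro/checkout.yaml (hypothetical app id and labels)
appId: com.example.shop
tags:
  - critical
  - checkout
---
- launchApp
- tapOn: "Cart"
- tapOn: "Checkout"
- tapOn: "Place order"
- assertVisible: "Order confirmed"
```

Running `maestro test --include-tags=critical .maestro/` then executes only the flows tagged `critical`, so revenue-driving paths can run on every build while secondary flows such as wish lists run less often.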

One example comes from an e-commerce platform that targeted its testing on checkout flows due to high cart abandonment rates. By focusing on frequent shoppers and setting measurable goals - such as completing checkout in under three minutes - they achieved impressive results: a 45% boost in conversions, a 30% drop in cart abandonment, a 60% reduction in user errors, and a 25% rise in satisfaction.

Set measurable success criteria. Avoid vague goals like "improve user experience." Instead, aim for specific targets, such as reducing checkout time to under two minutes or achieving a 90% task completion rate for new user registration.

Align with your audience. Tailor your testing goals to your users’ needs. If your app serves busy professionals, focus on speed and efficiency. For older users, prioritize simplicity and clear navigation.

"User research reduces the likelihood of building something that doesn't meet user needs, but only when everyone knows what those are." – Susan Farrell

Break complex goals into smaller tasks. Large objectives can feel overwhelming. Break them down into smaller, testable parts. For example, instead of testing the entire onboarding flow, focus on specific steps like email verification, social login options, or profile completion.
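The same decomposition carries over to automation. A minimal sketch in Maestro, assuming hypothetical step files in an `onboarding/` folder next to the parent flow: each step is its own flow, and the parent chains them with `runFlow`, so a failure report points at the step that broke rather than at "onboarding" as a whole.

```yaml
# onboarding.yaml: parent flow built from smaller steps
# (hypothetical app id, file names, and labels)
appId: com.example.shop
---
- launchApp:
    clearState: true
- runFlow: onboarding/email_verification.yaml
- runFlow: onboarding/profile_completion.yaml
# End-to-end check once every step has passed
- assertVisible: "Welcome"
```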

Once your goals are set, it’s time to plan device coverage and testing methods to ensure your flows work seamlessly across different scenarios.

Planning Device Coverage and Test Methods

Testing across various devices ensures your user flows perform consistently, no matter the hardware or software.

Start with your audience’s devices. Use analytics to determine the most common devices and operating systems among your users. For example, you might need to prioritize iOS over Android or focus on specific models based on your user demographics.

The stakes are high: nearly 90% of mobile users spend their time on just three to four apps, so an app gets few chances to earn a place among them, and 67% of users delete apps due to unclear navigation or insufficient information. Testing across different devices and screen sizes keeps these problems from reaching users whose hardware you never checked.

Balance simulators with real devices. Simulators are great for early-stage testing, but they can’t replicate real-world conditions. Testing on physical devices reveals how your app handles hardware constraints, network changes, and natural user interactions.

Test under varied network conditions. Your app’s performance on different connections - Wi-Fi, 4G, or slower networks - matters. Nearly half of users uninstall apps that perform poorly under slow conditions.

Account for screen diversity. A layout that looks great on a large iPhone might feel cramped on a smaller device. Ensure your user flows remain intuitive across a range of screen sizes and resolutions.

Combine manual and automated testing. Manual testing is invaluable for spotting usability issues and unexpected behaviors, while automation is ideal for repetitive tasks and regression testing. A hybrid approach - using automation for predictable flows and manual testing for exploratory scenarios - often provides the best results.

Manual and Automated Testing Methods

With all the groundwork in place, the next step is to execute tests using both manual and automated methods. The key is not choosing one over the other but knowing how to combine them effectively. When used together, these approaches ensure thorough validation of user flows, each contributing its strengths to the process.

Manual Testing Methods

Manual testing involves real people directly interacting with your app to assess how it functions and feels. This hands-on method shines when human judgment and intuition are needed most.

Manual testing is particularly effective for spotting usability issues and evaluating how the app performs in real-world conditions across various devices. For example, testers can identify problems like unclear error messages or awkward button placement - issues that automated scripts might overlook. This is especially important for complex user interactions that require a human touch to evaluate.

Unlike automated tests, manual testing provides qualitative feedback. Testers can point out when a user flow technically works but feels clunky or confusing. This feedback is invaluable for improving the overall user experience and reducing app abandonment rates.

However, there are challenges. Manual testing is slower, requires more effort, and is prone to human error. Testers might skip steps or perform tests inconsistently, and scaling manual testing is difficult due to time and resource constraints.

To counter these limitations, thorough documentation is essential. Clear records of test steps, expected outcomes, and any deviations help maintain consistency across testers and testing cycles.

While manual testing uncovers the nuances of user experience, automated testing ensures precision and scalability for repetitive tasks.

Automated Testing with Maestro

Automated testing relies on tools and scripts to run tests without human involvement, making it ideal for repetitive tasks and regression testing. Maestro simplifies this process with its user-friendly, YAML-based approach to mobile UI automation.

Why YAML? Maestro uses a declarative YAML syntax, which makes creating test scripts straightforward - even for team members without deep technical expertise. You can define user flows in a structured, readable format that outlines the actions to be performed.
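To illustrate, here is a sketch of a login flow in which the actions and the expected outcome read almost like a test case written for a human tester. The app id and labels are hypothetical, and the credentials are passed in at run time instead of being stored in the file:

```yaml
# login.yaml (hypothetical app id and labels)
# Run with, for example:
#   maestro test -e USERNAME=qa@example.com -e PASSWORD=secret login.yaml
appId: com.example.shop
---
- launchApp
- tapOn: "Email"
- inputText: ${USERNAME}
- tapOn: "Password"
- inputText: ${PASSWORD}
- tapOn: "Sign in"
# Expected outcome
- assertVisible: "Home"
- assertNotVisible: "Incorrect password"
```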

Built-in tolerance for delays. Maestro automatically waits for content to load (but no longer than necessary), so you don't need to scatter sleep calls or hand-written wait conditions through your tests.
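For the occasional step that is genuinely slow, such as a search backed by a remote API, you can still set an explicit upper bound. A sketch with a hypothetical app id and labels, using Maestro's `extendedWaitUntil` command:

```yaml
appId: com.example.shop   # hypothetical
---
- launchApp
- tapOn: "Search"
- inputText: "running shoes"
- pressKey: Enter
# Continues as soon as results appear; fails only after 15 seconds
- extendedWaitUntil:
    visible: "Search results"
    timeout: 15000          # milliseconds
```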

Cross-platform compatibility. Whether you're working with Android, iOS, React Native, Flutter, or web applications, Maestro has you covered. This unified approach reduces the learning curve and simplifies maintenance, allowing your team to apply the same testing framework across multiple platforms.
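Where the platforms genuinely differ, one shared flow can branch on the platform instead of splitting into two files. A sketch, assuming the Android and iOS builds share the hypothetical id `com.example.shop` and that the iOS screen has an in-app "Back" button (Maestro's `back` command works on Android only):

```yaml
appId: com.example.shop
---
- launchApp
- tapOn: "Product details"
# Android: use the system Back action
- runFlow:
    when:
      platform: Android
    commands:
      - back
# iOS: no system Back, so tap the app's own button
- runFlow:
    when:
      platform: iOS
    commands:
      - tapOn: "Back"
- assertVisible: "Featured"
```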

Quick setup and iteration. With a single binary installation, Maestro is easy to set up and use. Its simplicity supports rapid test development and execution, making it an excellent fit for continuous integration workflows.

Automated testing is all about consistency and scale. Once configured, automated tests run the same way every time, eliminating the risk of human error in repetitive tasks. They can also be scaled to run multiple tests simultaneously. The downside? Automated tests require an upfront investment in setup and ongoing maintenance as your app evolves. They also struggle to capture usability issues that depend on human intuition.

| Testing Aspect | Manual Testing | Automated Testing |
|---|---|---|
| User Experience | Ideal for assessing usability and human-centric issues | Limited in evaluating user experience |
| Scalability | Time-intensive; best for UI tests needing human insight | Efficient for large-scale, repetitive tasks |
| Cost Efficiency | Better for complex, infrequent tests requiring detail | Cost-effective for repetitive regression testing |
| Test Coverage | Flexible but less efficient for extensive test scenarios | Broad coverage for repetitive tasks |

Finding the Right Balance

A commonly cited rule of thumb is an 80/20 split between automated and manual testing. Its proponents claim it can reduce testing costs and time by up to 70% while still ensuring thorough coverage.

- Use automation for regression testing, performance checks, and repetitive user flows where consistency is critical.

- Reserve manual testing for exploratory scenarios, usability evaluations, and complex interactions that benefit from human insight.
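In Maestro, one way to keep the automated share organized is a workspace configuration that gathers flows from several folders and runs only those tagged for regression. A sketch, assuming hypothetical folder names and flows that declare a `regression` tag in their headers:

```yaml
# .maestro/config.yaml (folder names are hypothetical)
# Collect flows from these folders...
flows:
  - "regression/*"
  - "checkout/*"
# ...and run only the ones tagged for regression
includeTags:
  - regression
```

With this file in place, `maestro test .maestro/` runs the regression suite on every build, while exploratory and usability sessions stay manual.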

Your project's needs will dictate the balance. Apps with frequent updates lean heavily on automation, while those prioritizing a standout user experience may require more manual testing. Keep in mind that poor-quality apps can cost businesses up to $2.49 million in lost revenue, and half of mobile users won’t even consider an app with a three-star rating. Balancing manual and automated testing is not just about efficiency - it’s about delivering an app that users trust and enjoy.

User Flow Testing Best Practices

Strategic planning and ongoing adjustments are key to effective user flow testing. These approaches ensure your tests are thorough and lead to better app usability.

Testing on Real Devices and Emulators

Real devices and emulators each play a distinct role in testing, and knowing when to use them can mean the difference between catching critical errors and releasing an app with hidden flaws.

Emulators are great for early testing, while real devices are essential for final validation. During the initial development stages, emulators provide quick feedback on code changes. They're easy to set up, reset quickly, and work well for basic debugging. However, they can't replicate real-world conditions accurately.

Real devices, on the other hand, are indispensable for testing hardware-dependent features like GPS, cameras, microphones, and sensors. They offer accurate performance measurements and reveal issues like touch sensitivity, readability under different lighting, and hardware-specific performance bottlenecks. For example, LinkedIn's limited use of real device testing led to quality issues that could have been avoided.

"While virtual devices are good for basic validations, to really validate that an application works, you need to run tests against real devices." - Eran Kinsbruner, Author, Perfecto.io

Adopt the 90/70/100 rule for balanced testing. A typical testing setup includes:

- 90% virtual devices (and 10% real devices) for pre-commit checks

- 70% virtual and 30% real devices for multi-merge validation

- 100% real devices for final testing to ensure the app delivers a seamless user experience.

Cloud-based device testing services simplify access to a variety of real devices without the hassle of maintaining physical hardware. These platforms allow you to test across multiple device models, operating systems, and network conditions remotely.

| Feature | Emulators | Real Devices |
|---|---|---|
| Hardware Simulation | Limited simulation | Full hardware access |
| Network Testing | Basic network simulation | Real-world conditions |
| Performance Accuracy | Approximate metrics | Precise measurements |
| Setup Speed | Fast setup and reset | Slower but realistic |
| Best Used For | Early debugging | Final validation |

Next, incorporate user feedback to refine your testing even further.

Adding Feedback and Monitoring

User feedback is a powerful tool for continuous improvement. The best apps don't just test user flows - they monitor them constantly and evolve based on real user behavior.

Prompt feedback at key moments. Use in-app surveys, rating requests, or feedback forms at critical points, like after a purchase or when users face potential friction. Apps with strong feedback loops report 70% higher engagement, and those that act on feedback see a 300% boost in customer satisfaction. Timing is crucial - most users prefer sharing feedback soon after interacting with a feature, and making it easy to give feedback can increase response rates by 50%.
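Once a feedback prompt is part of a flow, it deserves a test of its own so it keeps appearing at the intended moment. A sketch, assuming a hypothetical app, placeholder labels, and an existing `checkout/place_order.yaml` subflow that completes a purchase:

```yaml
# feedback_prompt.yaml (hypothetical app id, labels, and subflow)
appId: com.example.shop
---
- launchApp
# Reuse the purchase flow so this file only checks the prompt
- runFlow: checkout/place_order.yaml
- assertVisible: "Order confirmed"
# The prompt should appear right after the purchase
- assertVisible: "How was your checkout?"
- tapOn: "Not now"
# Dismissing must actually close the prompt
- assertNotVisible: "How was your checkout?"
```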

Analyze engagement data to identify problem areas. Tools like Google Analytics or Mixpanel show which user flows are working and which need improvement. Pairing this data with A/B testing can pay off: conversion gains of up to 49% are commonly reported, and some tests have achieved improvements as high as 300%.

Close the feedback loop by acting on user input. Inform users about changes made based on their suggestions. This builds trust and loyalty, encouraging more feedback. Research shows that 83% of users are more likely to provide feedback again if they feel their input is valued.

"Get to know the users you add to your beta program. If you are going to build and iterate on products with their feedback in mind, you need to understand who they are and if they represent your target audience." - Ashton Rankin, Kik Product Manager

Establish clear processes for handling feedback. Teams that prioritize feedback cycles often see faster bug fixes - up to 40% quicker - and can reduce time-to-market by 15–20%.

Using Data to Improve User Flows

Beyond direct feedback, data analytics offers valuable insights into user behavior, helping you identify and fix hidden issues. Combining user feedback with analytics provides a complete picture of what users do and why.

Track engagement and drop-off points. Screen view tracking highlights where users engage the most and where they abandon their journey. Pairing this with session recordings can reveal exactly where and why user flows break down. Performance belongs in the picture too: with 53% of users abandoning apps that take more than three seconds to load, monitoring load times and crash reports is essential.

Segment user data to uncover unique behaviors. Different user groups often navigate apps in distinct ways. By analyzing segmented data and reviewing session recordings, you can discover unconventional user paths and optimize them. Airbnb used this approach to improve its booking process, boosting conversions by 10%. Similarly, Spotify uses analytics to tailor playlists, increasing engagement and retention.

Combine funnel analysis with session recordings. High drop-off rates in funnels often point to specific problems. For example, one financial app found that users abandoned loan applications at the income verification stage. Heatmaps and session recordings showed users struggled with the 'Upload Documents' feature, especially on older devices.

Correlate user behavior with technical performance. A high bounce rate paired with slow response times often signals performance issues. Addressing these can lead to immediate improvements in user flow completion rates.

Using data effectively ensures smoother user flows and keeps users engaged.


Tools for Mobile User Flow Testing

Using the right tools for mobile user flow testing can make all the difference. They help identify issues early on and keep your app running smoothly. By combining effective tools with best practices, you can streamline the entire testing process and ensure a seamless user experience.

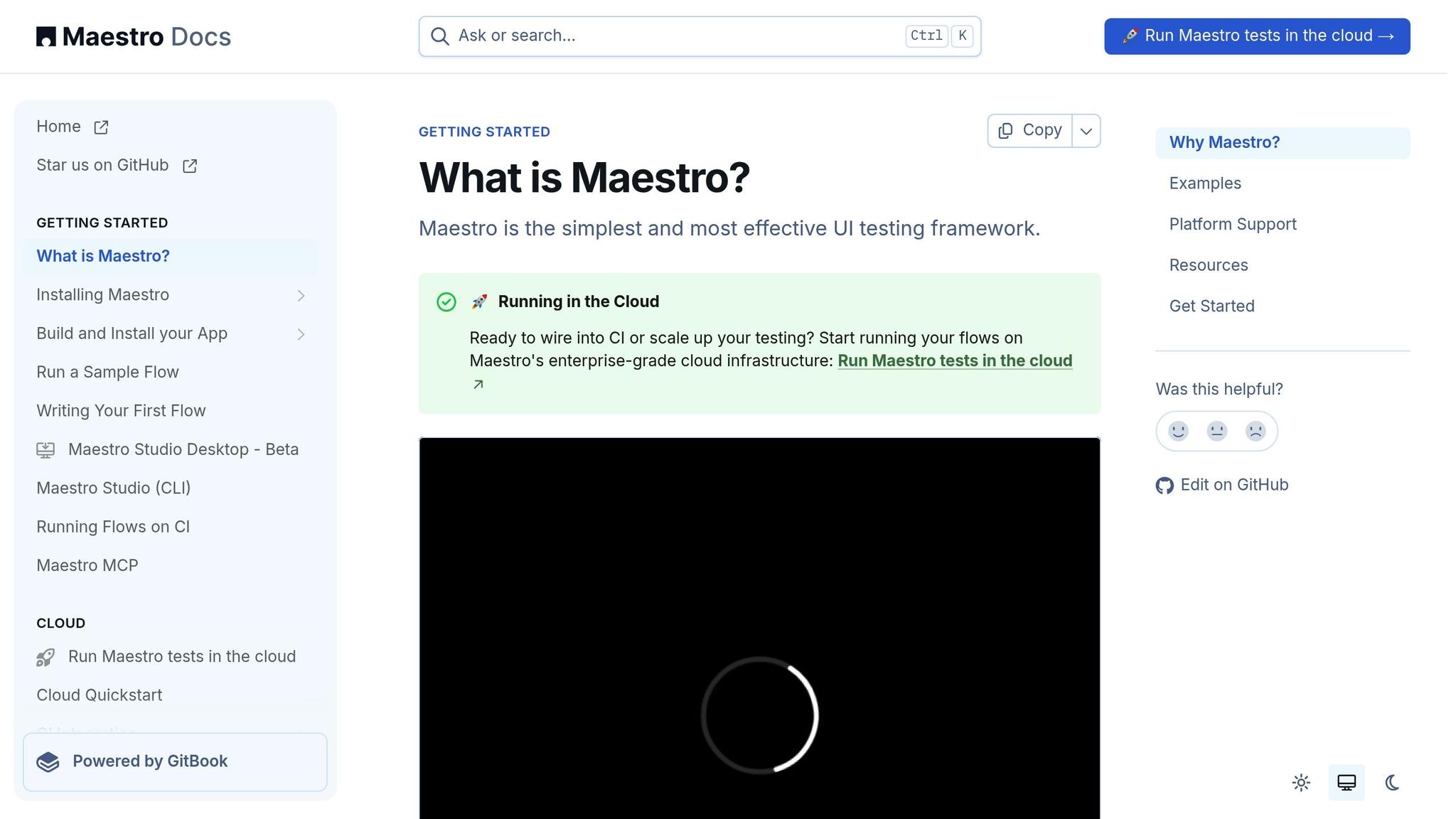

Maestro Features and Benefits

Maestro is a straightforward and efficient framework for mobile UI testing. Its YAML-based setup simplifies test creation, making it accessible to both technical and non-technical team members. This approach eliminates much of the complexity that typically comes with mobile automation.

Maestro supports multiple platforms, including Android, iOS, native apps, React Native, Flutter, and WebViews. This means your team can focus on creating tests rather than juggling different tools. It also automatically handles common issues like test delays, network inconsistencies, and device performance variations - removing the need for frequent manual adjustments.

With Maestro Studio's interactive GUI, coding becomes a visual process. Users can create flows, identify element IDs, and generate commands without needing extensive programming knowledge. For example, consider this simple contact creation flow written in YAML:

```yaml
appId: com.android.contacts
---
- launchApp
- tapOn: "Create new contact"
- tapOn: "First Name"
- inputText: "John"
- tapOn: "Last Name"
- inputText: "Snow"
- tapOn: "Save"
```

This clear and readable format allows even beginners to write test flows with ease.
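The contact flow taps through the form but never checks the result. A sketch of the same flow with a closing assertion, assuming the contacts app shows the saved name on the screen that follows "Save" (labels and screens vary by Android version and device vendor):

```yaml
appId: com.android.contacts
---
- launchApp
- tapOn: "Create new contact"
- tapOn: "First Name"
- inputText: "John"
- tapOn: "Last Name"
- inputText: "Snow"
- tapOn: "Save"
# Verify the outcome, not just the taps
- assertVisible: "John Snow"
```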

Maestro also speeds up UI test creation - reducing the time by more than 10× - and launches apps in just 12 seconds, compared to the usual 24 seconds. It integrates seamlessly with popular CI/CD platforms like GitHub Actions, Bitrise, Bitbucket, and CircleCI. Plus, its Maestro Cloud service eliminates the need for local emulator setups by running tests on cloud devices, simplifying your CI/CD workflows.

The framework includes advanced features like AI-assisted element identification, automated test recommendations, JavaScript injection, HTTP request support, automatic screenshot capture, and a continuous mode in the CLI that re-runs a flow automatically whenever you edit it. These capabilities extend your testing process and help ensure thorough coverage across different environments.
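A sketch combining two of these features: JavaScript injection through `evalScript` and screenshot capture through `takeScreenshot`. The app id and labels are hypothetical; the script generates a unique email so that repeated runs don't collide with an existing account.

```yaml
appId: com.example.shop   # hypothetical
---
- launchApp
# JavaScript injection: store a unique email in the output object
- evalScript: ${output.email = 'user' + Date.now() + '@example.com'}
- tapOn: "Sign up"
- tapOn: "Email"
- inputText: ${output.email}
- tapOn: "Create account"
- assertVisible: "Welcome"
# Save a screenshot of the result for the test report
- takeScreenshot: signup_complete
```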

Additional Tools and Resources

While Maestro covers the core automation needs, combining it with other tools can elevate your testing strategy. Here are some additional resources to consider:

- Device farms and cloud testing services: These platforms provide access to a wide range of devices, operating systems, and network conditions without the need to maintain physical hardware.

- Analytics platforms: Tools like Google Analytics, Mixpanel, and Firebase help you understand user behavior by tracking interactions, identifying drop-off points, and measuring conversion rates.

- Performance monitoring tools: These tools monitor real-time app performance, helping you detect bottlenecks that may not appear during functional testing but could impact the user experience.

- Session recording and heatmap tools: By capturing real user interactions, these tools reveal unexpected usage patterns and friction points, helping validate test scenarios and discover new testing paths.

- Feedback collection platforms: Tools such as in-app surveys, feedback widgets, and user interview platforms allow you to gather direct input from users during beta testing and after release.

- Version control and test management systems: These systems help you organize test flows, track changes, manage schedules, and provide reporting dashboards for stakeholders.

Conclusion

Testing user flows in mobile apps isn’t just a technical task - it’s a critical step toward ensuring your app’s success. Consider this: 80% of users delete or uninstall apps that don’t meet their expectations, and 48% abandon apps that are slow. When 66% of users uninstall apps or switch to competitors after encountering performance issues, nailing your user flows from the beginning isn’t optional - it’s essential.

This guide has walked you through the key steps of user flow testing, from mapping out user journeys and defining test goals to leveraging both manual and automated testing methods. The takeaway? Early, precise testing is your best ally. As Ilia Tseliatsitski, Quality Assurance Lead at SolveIt, puts it:

"The best practice is to implement testing during the discovery stage while exploring project requirements. By identifying flaws in the requirements early on, the necessary corrective measures can be limited to textual modifications in documentation, resulting in reduced expenses."

Starting early not only reduces costs but also ensures that users get the seamless experience they demand.

And when it comes to tools, Maestro stands out. With its YAML-based framework, Maestro makes automating user flow tests straightforward for both technical and non-technical teams. Cleevio reports saving 19 hours in its first month with Maestro and 208.8 hours in total by the sixth month. David Zadražil, CEO at Cleevio AI Automations, explains the thinking behind that kind of automation:

"Our belief is simple: AI should empower people, not replace them. We're not preaching some abstract future, we've implemented it ourselves. We don't sell something we wouldn't use. It's about freeing people from routine so they can focus on high-impact work."

Ultimately, combining thoughtful planning, reliable tools, and consistent testing practices lays the groundwork for app success. Remember, user flow testing isn’t a one-and-done task - it’s an ongoing process. Apps evolve, user expectations shift, and new devices emerge. By embracing robust testing strategies and tools like Maestro now, you’re setting your app up for long-term success in an ever-changing market.

FAQs

What’s the difference between manual and automated testing, and how can they work together to test user flows in mobile apps?

Manual testing takes a hands-on approach, where testers mimic real user interactions to assess an app’s usability, design, and overall experience. This method shines when it comes to spotting subtle issues in UI/UX and is particularly effective for exploratory testing.

On the flip side, automated testing relies on tools to handle repetitive tasks quickly and consistently. It’s perfect for tasks like regression testing, performance evaluations, and testing on a larger scale. This approach not only saves time but also ensures consistency throughout multiple test cycles.

By combining these two approaches, you get the best of both worlds. Manual testing helps uncover detailed user experience issues, while automation ensures efficiency and reliability for repetitive tasks. Together, they offer comprehensive coverage for testing user flows in mobile apps.

How can user feedback and analytics help improve mobile app user flows?


Understanding how users navigate your app is crucial, and that's where user feedback and analytics step in. By examining data such as user paths and session durations, you can pinpoint the moments where users encounter difficulties. This insight helps you fine-tune navigation and eliminate friction points, making the app experience more seamless.

Using tools that track engagement metrics can reveal how users interact with different features of your app. For example, you can see which screens hold their attention and which ones they abandon quickly. These insights are invaluable for optimizing the user journey.

Adding in-app feedback options, like quick surveys or prompts, allows you to hear directly from users in real time. When you combine this feedback with analytics, you can make iterative updates to key features, such as onboarding flows or overall usability. This approach ensures your app keeps pace with user needs, delivering a smoother and more enjoyable experience.

What are the biggest challenges in testing user flows on mobile apps, and how can they be resolved to improve the user experience?

Testing user flows in mobile apps can be tricky, thanks to challenges like device fragmentation, varying operating systems, network inconsistencies, and UI/UX differences. These variables often result in unpredictable app behavior, making the testing process more demanding.

To tackle these hurdles, it's important to test across a variety of devices and OS versions to mimic real-world scenarios. Start by focusing on the most critical user flows to make the best use of your testing resources. Leveraging automation tools can simplify repetitive tasks, providing consistent test coverage and speeding up the process. A mix of manual and automated testing ensures you catch issues effectively, paving the way for a smoother app experience.

We're entering a new era of software development. Advancements in AI and tooling have unlocked unprecedented speed, shifting the bottleneck from development velocity to quality control. This is why we built — a modern testing platform that ensures your team can move quickly while maintaining a high standard of quality.
